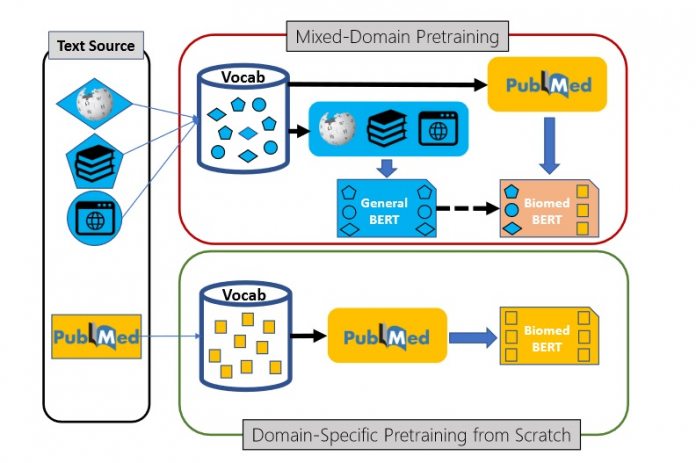

Specifically, Microsoft Research says the results were better than previously seen. According to the team, the AI could classify documents, extract evidence-based medical information, recognize named entities, and more. Training NLP models for a specific role, such as in biomedicine, research indicated using domain-specific data provides accuracy. Microsoft wanted to extend this potential by tweaking the concept behind AI training. While domain-specific data is accurate, previous testing worked on the assumption “out-of-domain” data was also useful. Microsoft Research thought this was incorrect because mixing domain pretraining data is less accurate. For their new pretraining model, the team shows domain-specific pretraining on its own outperforms the generic mixed domain pretraining. “To facilitate this study, we compile a comprehensive biomedical NLP benchmark from publicly available datasets and conduct in-depth comparisons of modeling choices for pretraining and task-specific fine-tuning by their impact on domain-specific applications. Our experiments show that domain-specific pretraining from scratch can provide a solid foundation for biomedical NLP, leading to new state-of-the-art performance across a wide range of tasks.”

Training

Like any AI training, evaluating the process was important. Microsoft Research generates a training model using vocabulary from the latest PubMed document dataset. This includes 14 million abstracts and 3.2 billion words, weighing 21GB. Using a single Nvidia DGX-2 powered machine with 16 V100 GPUs, training took five days. Built on Google’s BERT, the new model is called PubMedBERT and consistently outperforms similar AI in terms of biomedical NLP learning. “We show that domain-specific pretraining from scratch substantially outperforms continual pretraining of generic language models, thus demonstrating that the prevailing assumption in support of mixed-domain pretraining is not always applicable.”