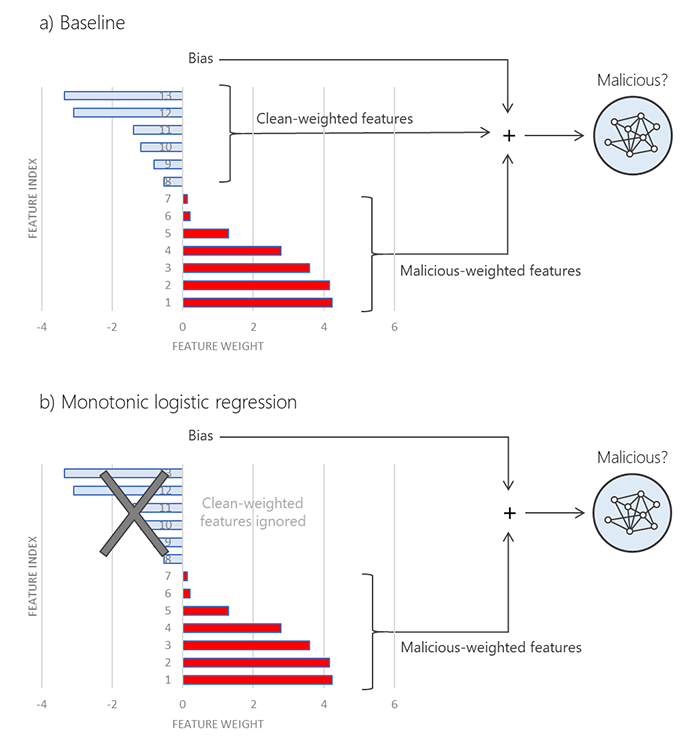

“Most machine learning models are trained on a mix of malicious and clean features. Attackers routinely try to throw these models off balance by stuffing clean features into malware,” explains the company. “Monotonic models are resistant against adversarial attacks because they are trained differently: they only look for malicious features. The magic is this: Attackers can’t evade a monotonic model by adding clean features. To evade a monotonic model, an attacker would have to remove malicious features.”

Monotonic models were notably explored by UC Berkeley researchers last summer, and Microsoft says it deployed them shortly after. According to the company, the technique has helped in a number of situations, most notably in fighting the LockerGoga ransomware. For the unfamiliar, LockerGoga has been used in a number of targeted attacks against infrastructure. Reported attacks against Altran Technologies, Norsk Hydro, Hexion, and Momentive have previously made the news.
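To make the monotonic property concrete, here is a minimal Python sketch. It is not Microsoft's classifier: the feature names, weights, and threshold are hypothetical, and a simple sum of non-negative weights stands in for whatever model is actually used. It only illustrates the claim in the quote, that adding clean features cannot lower the score, while removing malicious ones can.

```python
# Hypothetical malicious features and weights, chosen only for illustration.
# Every weight is non-negative, which is what makes the score monotonic.
MALICIOUS_WEIGHTS = {
    "encrypts_user_files": 6,
    "deletes_shadow_copies": 3,
    "disables_network_adapters": 2,
}
THRESHOLD = 5  # assumed cutoff for flagging a file


def score(features: set) -> int:
    """Sum the weights of the malicious features that are present.

    Features with no entry in MALICIOUS_WEIGHTS (for example clean ones)
    contribute nothing, so adding them can never reduce the score.
    """
    return sum(w for name, w in MALICIOUS_WEIGHTS.items() if name in features)


def is_malicious(features: set) -> bool:
    return score(features) >= THRESHOLD


sample = {"encrypts_user_files", "deletes_shadow_copies"}

# Attacker pads the file with clean-looking features: score is unchanged.
padded = sample | {"valid_signature", "has_version_info", "common_imports"}

# Attacker removes a malicious behaviour: score drops, but so does the harm.
stripped = sample - {"encrypts_user_files"}

print(score(sample), is_malicious(sample))
print(score(padded), is_malicious(padded))
print(score(stripped), is_malicious(stripped))
```

In this toy setup the padded sample scores exactly the same as the original, so the only way under the threshold is to drop the behaviour that made the file dangerous in the first place.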

The defining threat from LockerGoga is the fact that it was signed with a valid certificate. This is one of the ‘clean’ features Microsoft is talking about. Because of the valid certificate, the ransomware is more likely to be deployed on a victim’s machine without them noticing. With ATP, Microsoft sends a featurized description of the file to its cloud protection service, which classifies it in real time. This is done through an array of machine learning classifiers that don’t take any certificates into account.
